Surface Computing Technology

Technology keeps taking new turns, and modes of interaction have become more specialized along with it. Human-computer interaction has brought new obstacles, and satisfying the user's expectations has become a necessity. This paper therefore provides a comparative study of flat and nonflat surface computing interfaces, focusing on the ideas behind each. It reflects on aspects such as visibility techniques on both flat and nonflat surfaces and the all-round view.

Computers allow us to have multiple applications done in

multiple windows. Use only one keyboard and one mouse

and only one person can do work at a time. If we want to

watch photos on our computer along with three or four other

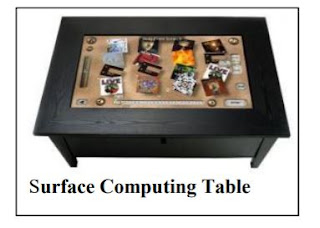

people, just imagine everyone trying to see them. Microsoft

introduces surface computing, Microsoft Surface allows people

to sit across in different positions and watch the images.

Spread the photos across the Microsoft Surface and anyone

can pull photos towards them like you pull physical photos,

with fingers. The name Surface comes from “surface

computing” and Microsoft envisions the coffee-table machine

as the first of many such devices. It uses many wireless

protocols. The table can be built with a variety of wireless

transceivers, including Bluetooth, Wi-Fi, and (eventually)

radio frequency identification (RFID) and is designed to sync

instantly with any device that touches its surface. It supports

multiple touch points – Microsoft says "dozens and dozens" --

as well as more than one user simultaneously, so more

people could be using it at once, or one person could be doing

so many tasks.
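To make the idea of many simultaneous touch points more concrete, here is a minimal sketch of how software might keep each finger's identity from one camera frame to the next. It is an illustration only, not Microsoft's method: the function name `match_touches`, the `max_jump` distance limit, and the greedy nearest-neighbor matching are all assumptions chosen for brevity.

```python
import math

def match_touches(previous, current, max_jump=40.0):
    """Assign IDs to current touch points by greedy nearest-neighbor matching.

    previous: dict mapping touch id -> (x, y) from the last frame
    current:  list of (x, y) points detected in this frame
    max_jump: hypothetical limit on how far a finger may move between frames
    Returns a new dict mapping touch id -> (x, y).
    """
    next_id = max(previous, default=-1) + 1
    unused = dict(previous)   # touches from the last frame not yet matched
    tracked = {}
    for point in current:
        best_id, best_dist = None, max_jump
        for touch_id, old in unused.items():
            dist = math.dist(point, old)
            if dist <= best_dist:
                best_id, best_dist = touch_id, dist
        if best_id is None:
            # No earlier touch is close enough: treat this as a new finger.
            best_id = next_id
            next_id += 1
        else:
            del unused[best_id]
        tracked[best_id] = point
    return tracked

# Two fingers land, then both move slightly while a third finger joins.
frame1 = match_touches({}, [(100, 100), (400, 300)])
frame2 = match_touches(frame1, [(405, 310), (110, 95), (700, 50)])
```

Because each touch keeps its own ID, several people can drag different photos at the same time without their gestures being confused. A greedy match like this can mislabel fingers that cross paths, so a real tracker would likely need something more careful.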

Surface computing can be implemented on both flat and nonflat interfaces. Flat surfaces are more user-friendly and are commonly used today. Nonflat surfaces are still under study and belong to the future; designing a nonflat surface interface is a very complex task. Human gestures play a pivotal role in surface computing. The Surface's 30-inch touch screen is used without a mouse or keyboard and is large enough for group participation.

The most common and popular type of surface computing is the touch screen monitor, of the kind found on many modern phones. Touch screens are also very common in businesses where untrained workers are expected to use a computer.[2] Until recently, though, these touch screen monitors were little more than a replacement for the mouse. We could still point at only one thing at a time, and a touch screen was not even as good as a mouse, because you cannot right-click or highlight things without using a keyboard.

HOW IT WORKS

1. Screen: A large horizontal “multitouch” screen is used. The Surface can recognize objects by reading coded “domino” tags.

2. Projector: The Surface uses a DLP light engine of the kind found in many rear-projection HDTVs. The visible-light screen it projects has a footprint of 1024 x 768 pixels. Wireless communication is also used here.

3. Infrared (IR cut filter): The Surface uses an 850 nm infrared light source.

4. CPU: A Core 2 Duo processor, 3 GB of RAM, and a 500 MB graphics card, the same kind of configuration used in an everyday desktop computer.

5. Camera (IR pass filter): Cameras capture what happens on the screen, while images are projected onto its underside. Hand gestures play an important role: fingers, hand gestures, and objects are visible through the screen to cameras placed underneath the display. An image processing system analyzes the captured image to detect fingers and objects such as paintbrushes. Once an object is recognized, the appropriate application starts running. It is a computer with a different look and feel.
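The image processing step in item 5 can be sketched roughly as follows. This is a minimal illustration, not the Surface's actual pipeline: it assumes the camera frame arrives as a grid of brightness values from 0 to 255, that anything touching the screen appears brighter than a hypothetical `threshold`, and that touching pixels can be grouped into "blobs" with a simple flood fill. The name `find_blobs` is made up for this example.

```python
from collections import deque

def find_blobs(image, threshold=128):
    """Return a list of blobs; each blob is a list of (row, col) bright pixels."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if image[r][c] < threshold or seen[r][c]:
                continue
            # Flood-fill from this bright pixel through its 4-connected neighbors.
            queue = deque([(r, c)])
            seen[r][c] = True
            blob = []
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and image[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(blob)
    return blobs

# A tiny made-up camera frame: one larger contact and one single-pixel contact.
frame = [
    [0,   0,   0, 0,   0, 0],
    [0, 200, 210, 0,   0, 0],
    [0, 205, 220, 0,   0, 0],
    [0,   0,   0, 0, 180, 0],
    [0,   0,   0, 0,   0, 0],
]
blobs = find_blobs(frame)
sizes = [len(blob) for blob in blobs]
```

The size and shape of each blob is the kind of information a recognizer could then use to tell a fingertip from a larger object placed on the table.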

Touch Screen Concept

Infrared cameras sense objects, hand gestures, and finger touches. A rear projection system displays the image onto the underside of a thin diffuser. Objects such as fingers are visible through the diffuser to a series of infrared cameras positioned underneath the display. The recognized objects are reported to the applications running on the computer so that they can react to an object's shape, touch, and movement.
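One way to picture how recognized objects are "reported to the applications" is a small publish/subscribe layer. The sketch below is purely illustrative: the `Contact` and `SurfaceEvents` names, the `"finger"` and `"object"` labels, and the callback style are assumptions, not part of any real Surface API.

```python
from dataclasses import dataclass

@dataclass
class Contact:
    kind: str   # hypothetical label: "finger" or "object"
    x: float    # position on the screen
    y: float

class SurfaceEvents:
    """Forwards recognized contacts to whichever applications subscribed."""

    def __init__(self):
        self._handlers = {}

    def subscribe(self, kind, handler):
        # An application registers a callback for one kind of contact.
        self._handlers.setdefault(kind, []).append(handler)

    def report(self, contact):
        # Called by the vision system each time it recognizes something.
        for handler in self._handlers.get(contact.kind, []):
            handler(contact)

log = []
events = SurfaceEvents()
events.subscribe("finger", lambda c: log.append(f"touch at ({c.x}, {c.y})"))
events.subscribe("object", lambda c: log.append(f"object placed at ({c.x}, {c.y})"))

events.report(Contact("finger", 120, 80))
events.report(Contact("object", 300, 200))
```

Keeping recognition separate from the applications in this way means a photo viewer and a painting program can each react to the same touch data in their own manner.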

For example, consider a screen: when fingers are placed on it, the spots where they touch are shadowed while the rest of the screen remains lit. This is picked up by the infrared cameras: infrared light is projected from under the table and reflected by the fingertip. The resulting changes in IR light are captured by the webcam and sent to the software.
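As a rough sketch of how software might turn those IR light changes into a touch position, the example below compares the current camera frame against a stored background frame and averages the positions of the pixels that changed. It assumes that a fingertip reflects IR light and therefore appears brighter than the background; the function name `fingertip_centroid` and the `min_change` threshold are hypothetical.

```python
def fingertip_centroid(background, frame, min_change=50):
    """Return the (row, col) centroid of pixels that brightened, or None."""
    total = row_sum = col_sum = 0
    for r, (bg_row, fr_row) in enumerate(zip(background, frame)):
        for c, (bg, fr) in enumerate(zip(bg_row, fr_row)):
            # Keep only pixels noticeably brighter than the empty-screen image.
            if fr - bg >= min_change:
                total += 1
                row_sum += r
                col_sum += c
    if total == 0:
        return None   # nothing is touching the screen
    return (row_sum / total, col_sum / total)

# Made-up data: an evenly dim background, then a 2x2 bright patch from a finger.
background = [[10] * 5 for _ in range(4)]
frame = [row[:] for row in background]
frame[1][2] = frame[1][3] = frame[2][2] = frame[2][3] = 200

center = fingertip_centroid(background, frame)
```

Subtracting the background first makes the result less sensitive to uneven lighting across the table than a fixed brightness threshold would be.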
